AI is coming home with Foundry Local 🏠

Honestly, it's getting harder and harder to impress me these days. There's so much awesome new stuff coming out around AI that it's hard to keep up, but this latest release from Microsoft really caught my attention.

For quite some time, I've felt this tension between being innovative and still keeping things under control. I recently wrote about it in my article Can we really trust AI?, and it's a topic Peter and I covered in depth at Microsoft AI Tour earlier this year in our session Trustworthy AI: Future trends and how to secure reliable solutions.

Companies want the latest AI innovation, but without messing with privacy, compliance or regulations. And that's where Foundry Local steps in. At Microsoft Build 2025, Microsoft dropped this new offering that brings powerful AI capabilities right into your own local environment. It's part of a bigger shift towards "local-first AI".

Trust me, this is something you need to keep an eye on... 👀

What is Foundry Local?

At its core, Foundry Local is Microsoft's way of letting you run and fine-tune state-of-the-art open models like Phi-3 and Mistral right on your own device.

Think of it as bringing the power of advanced AI to your data, rather than sending your data off to someone else.

It's all about giving you control, keeping things private and getting lightning-fast responses, whether you're on-premises or in a public or private cloud.

How do I get started?

The best part is how ridiculously easy it is to get started, even if you just want to play around on your own machine. You don't even need to be a developer!

In this example I'm using Homebrew, but you can use winget or download the installer from GitHub if you'd prefer.

First we start off by installing Foundry Local from our terminal:

brew tap microsoft/foundrylocal
brew install foundrylocal

Here's a cool thing: you don't even need a Microsoft Azure account, you can just install it and start using it without any subscription or registration.

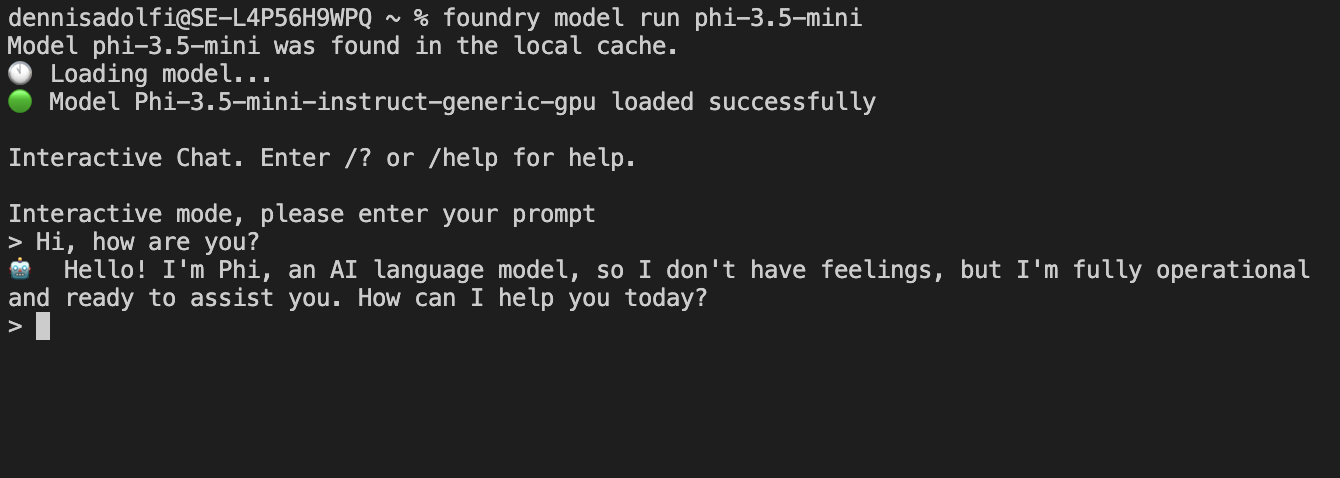

Next, download a model of your choice and start an interactive chat with this command:

foundry model run phi-3.5-mini

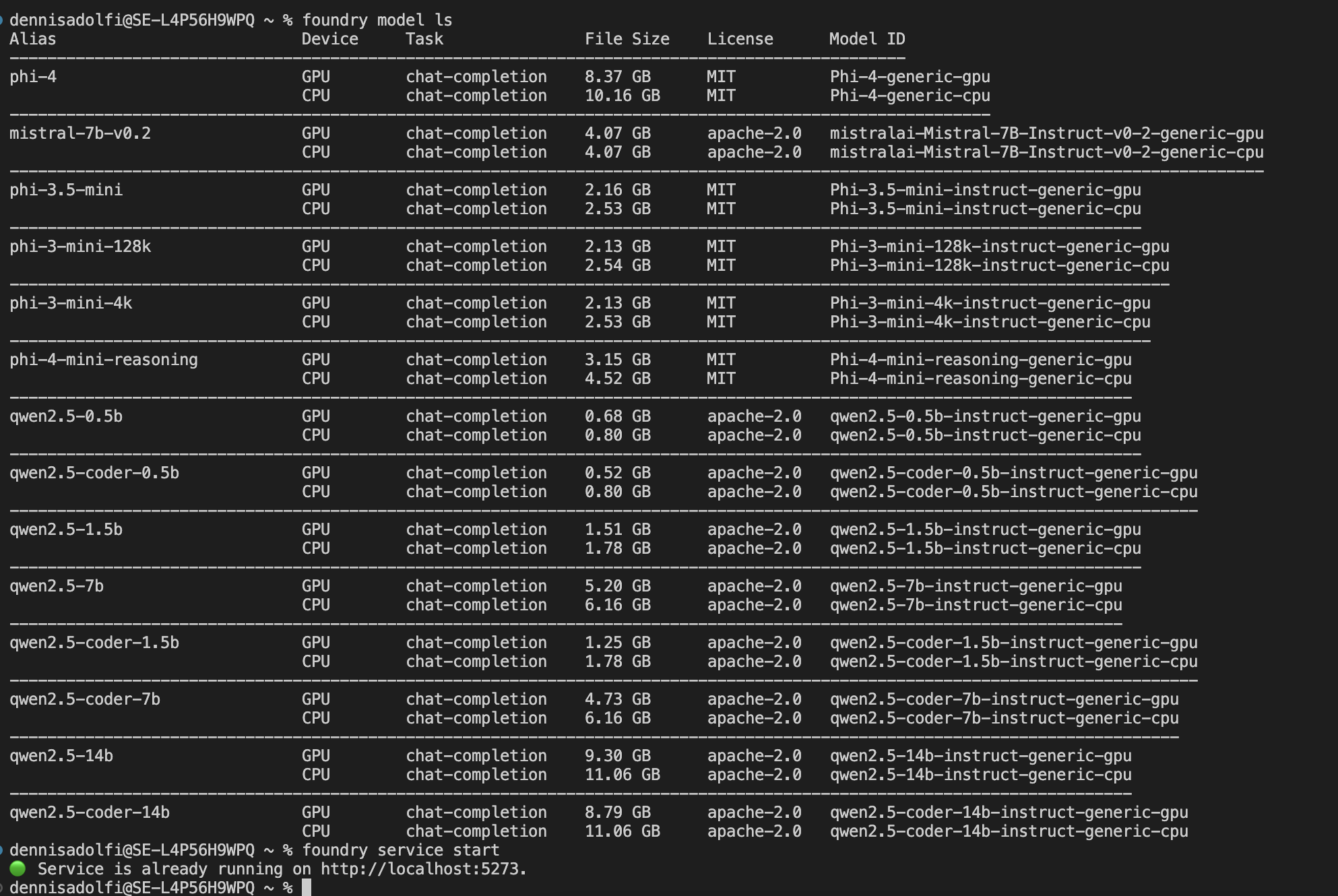

To see which models are available to download, use the command:

foundry model ls

Once your model is downloaded and locally cached, you don't even need an internet connection to communicate with it. It's running right there on your own local device and the data never leaves the safety of your home. How awesome is that for offline work or super-secure environments?

Foundry Local also exposes a REST API, so you can integrate and interact with your local models programmatically from your own applications.

To start the local API service run the command:

foundry service start

This will start the API service on http://localhost:5273 (your port might be different) and you can start communicating with the well-documented REST API.
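
Here's a minimal sketch of what calling the API could look like from Python, using only the standard library. It rests on a few assumptions that are not from this article, so check them against the documentation: that the service offers an OpenAI-style chat completions route at `/v1/chat/completions`, that the response follows the same shape, and that the `model` value below is accepted as-is (the service may expect the full model ID rather than the short alias). The port is the one shown above and may differ on your machine.

```python
import json
import urllib.request

# Port taken from the article; yours may be different.
BASE_URL = "http://localhost:5273"
# Assumption: an OpenAI-style chat completions route.
CHAT_PATH = "/v1/chat/completions"


def build_chat_request(prompt, model="phi-3.5-mini", base_url=BASE_URL):
    """Build (but do not send) a POST request for a single-turn chat."""
    payload = {
        # Placeholder: the service may want the full model ID here.
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        base_url + CHAT_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the prompt to the local service and return the reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Assumption: the reply follows the OpenAI chat completion shape.
    return body["choices"][0]["message"]["content"]
```

With the service running and a model loaded, `print(ask("Why run AI models locally?"))` would send one prompt and print the answer. Nothing leaves your machine, since the request only goes to localhost.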

There are also SDKs for C#, JavaScript and Python to help you quickly get started building your own apps against your local model.
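
To give a feel for the Python route, here is a rough sketch rather than a definitive recipe. It assumes the `foundry-local-sdk` and `openai` packages are installed, and that the SDK provides a `FoundryLocalManager` class with `endpoint`, `api_key` and `get_model_info()` as I understand it from Microsoft's quickstart; none of that is covered in this article, so verify the names against the official docs. `build_messages` is just a hypothetical helper of my own.

```python
def build_messages(prompt, system=None):
    """Return a chat message list, with an optional system instruction."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return messages


def chat_with_local_model(prompt, alias="phi-3.5-mini"):
    """Ask a locally running model one question and return its answer."""
    # Imported here so this file still loads without the packages installed.
    import openai
    from foundry_local import FoundryLocalManager  # assumed SDK entry point

    # Assumption: creating the manager starts the service and loads the model.
    manager = FoundryLocalManager(alias)

    # Point the regular OpenAI client at the local endpoint instead of the cloud.
    client = openai.OpenAI(base_url=manager.endpoint, api_key=manager.api_key)
    response = client.chat.completions.create(
        model=manager.get_model_info(alias).id,
        messages=build_messages(prompt),
    )
    return response.choices[0].message.content
```

The nice part of this design is that your app code looks the same as it would against a cloud endpoint. Only the base URL changes, which makes it easy to switch between local and hosted models later.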

Final thoughts

I see Foundry Local as so much more than just a service. It's a clear signal that the AI race isn't just about bigger and faster models, it's about control, trust and data ownership.

Foundry Local is a super important piece in this big shift towards local-first AI strategies. It empowers companies to shape AI exactly how they want it without having to make sacrifices on security and privacy. Super impressive! 🌟

For more detailed info, I highly recommend checking out Microsoft's official documentation on Foundry Local:

https://github.com/microsoft/Foundry-Local