Lightning talk summary from Fika Fast Talk on Foundry Local

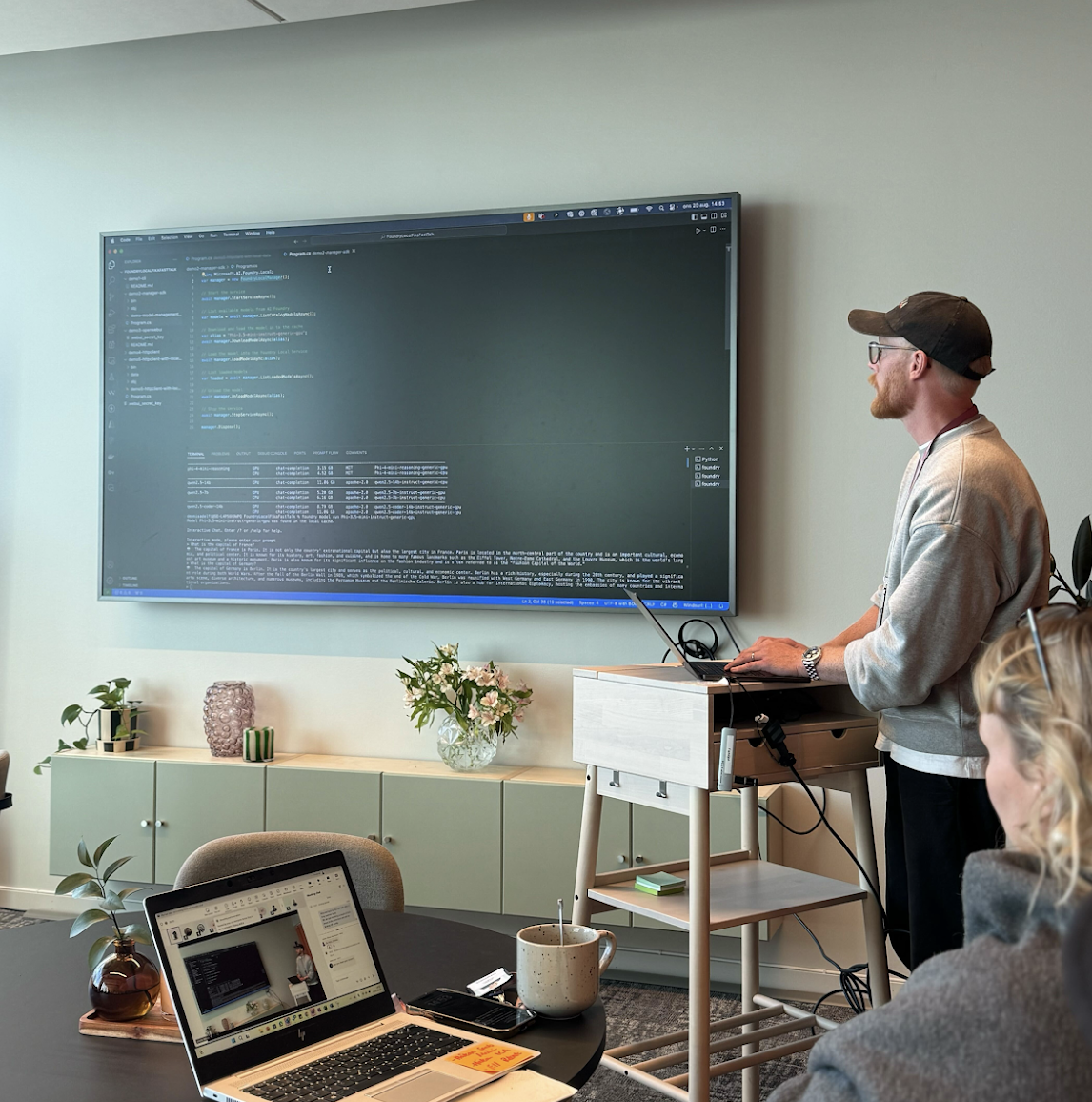

Earlier this week I had the pleasure of hosting a Fika Fast Talk at our office in Gothenburg on Foundry Local, Microsoft's latest solution (currently in preview) for running AI models locally on-device.

We explored some of the key benefits of running models on-device, such as:

🔒 Privacy: sensitive data never leaves your local device.

🛜 Connectivity: works even when network access is limited or unavailable.

⚡ Low latency: faster responses, with no cloud round trips.

💰 Cost control: less cloud dependency means more predictable costs.

The roughly 40 people who joined (both in person and virtually) also got a live demo in which we set up and ran Foundry Local from the terminal and through the management SDK, connected to it via Open-WebUI, and showed how to build an app that talks to the AI service using embedded local files that never leave the comfort of your own device.

Thanks to everyone who joined, and I hope you enjoyed it as much as I did! 💖