Can we really trust AI?

This post gathers thoughts from my recent AI and the future of E-commerce tour, where I traveled across the country together with Niclas Åström to share our thoughts on how artificial intelligence might shape the future E-commerce customer experience.

Throughout these trips, I observed a clear shift in how people view AI over the course of 8 months, especially around crucial questions regarding trust.

From Hype to Worry!

During this tour, I noticed an interesting shift in audience reactions over time. Early on, participants were enthusiastic and asked questions about new AI tools, efficiency, and higher profits. Questions like "How do we get started?" and "Which service should we use?" seemed to be top of mind.

But over the past 6-8 months, the enthusiasm has faded slightly, and people have started asking questions like “Is AI safe?” and “Can we really trust AI systems?” Even though our presentation remained the same throughout the tour, the conversations became more focused on potential bias, privacy, and fears that AI might become too powerful.

These concerns also come up when I talk to friends and family outside of tech (teachers, musicians, writers) who see AI as a "monster" that no one can truly control. Some even ask:

“Shouldn’t we just stop AI development altogether?”

The truth is that AI development continues worldwide and keeps producing scientific breakthroughs. If those who value ethics, responsibility and accountability decide to stop, it simply leaves the field open for others who may not share these moral values.

Over the past year, I’ve been doing a lot more ML and AI coding, and I must say that the more I understand, the less “magical” it seems. AI is powerful, but it’s not beyond human control.

"If those who value ethics, responsibility and accountability decide to stop, it simply leaves the field open for others who may not share these moral values."

It’s also important to remember that AI is essentially a reflection of ourselves, including our biases and shortcomings. The world is unequal, unfair, sometimes racist, and heavily influenced by political views. We shouldn’t act like AI itself is the problem.

Instead, we need to carefully address these issues and be very intentional about what data we feed our AI models. We want AI that reflects the society we aspire to have, not the injustices of the present. And just as we keep working on these questions in everyday life, we must do so in our AI development as well.

"We want AI that reflects the society we aspire to have, not the injustices of the present."

What are people concerned about?

Despite the great promise of AI, there are legitimate reasons for caution:

Bias: If AI is trained on incomplete or skewed data, it can produce unfair outcomes.

Transparency: Many AI models can seem like “black boxes,” where even creators have a hard time explaining how decisions are made.

Security: As AI grows more common, it can be misused through deepfakes or disinformation campaigns.

Privacy: AI often relies on large amounts of data, potentially including sensitive personal information. This raises worries about how data is stored, who has access to it and whether it could be stolen or misused.

Avoiding adoption might be the worst option

Some businesses opt to “wait and see” if AI proves itself before jumping in, but waiting too long can be risky. Nokia, once a global leader in mobile phones, was slow to respond to the touchscreen revolution despite having the resources to compete with Apple and Samsung. BlackBerry, similarly leading in smartphones, insisted users wanted physical keyboards over touchscreens.

Both giants went from top-of-mind brands to largely forgotten in a short space of time. Most young people today barely know Nokia or BlackBerry. In a similar way, businesses that stall on AI may be left behind by competitors that dive in and learn faster.

"The key is to address ethical questions while moving forward, not by standing still."

How can we address these concerns?

Fortunately, many tech leaders and communities are striving to make AI more transparent and ethical. Microsoft, for instance, has the Responsible and Trustworthy AI frameworks, which offer tools and practical resources for addressing security, privacy and safety concerns. These can help developers and stakeholders address potential injustices and better understand the rationale behind AI-driven decisions.

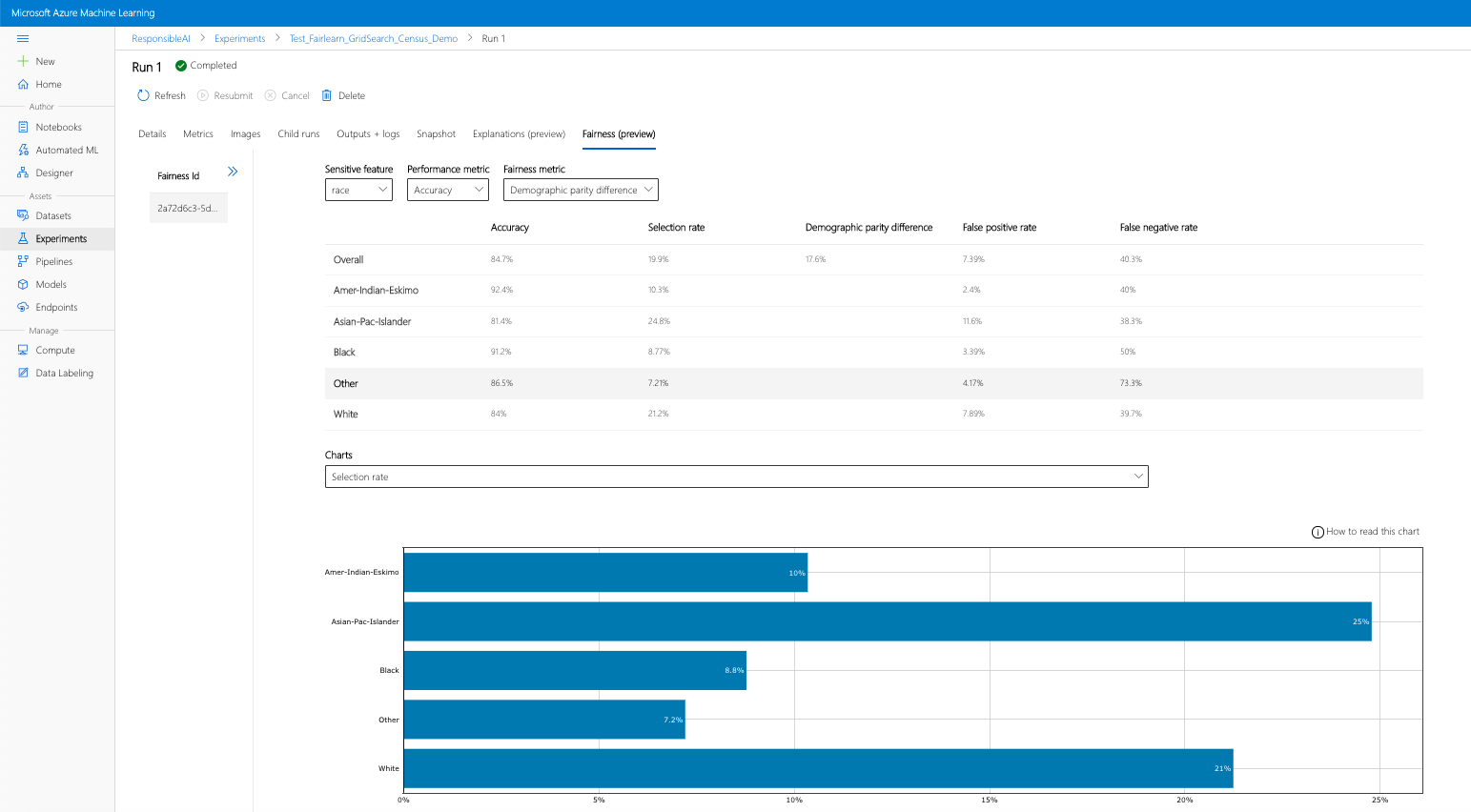

In Azure Machine Learning, for example, you can now generate fairness reports to understand how different demographics are represented in your training data and proactively address any bias or unfair predictions. You can also view explanations to understand why certain predictions were made, based on the data and feature importance.

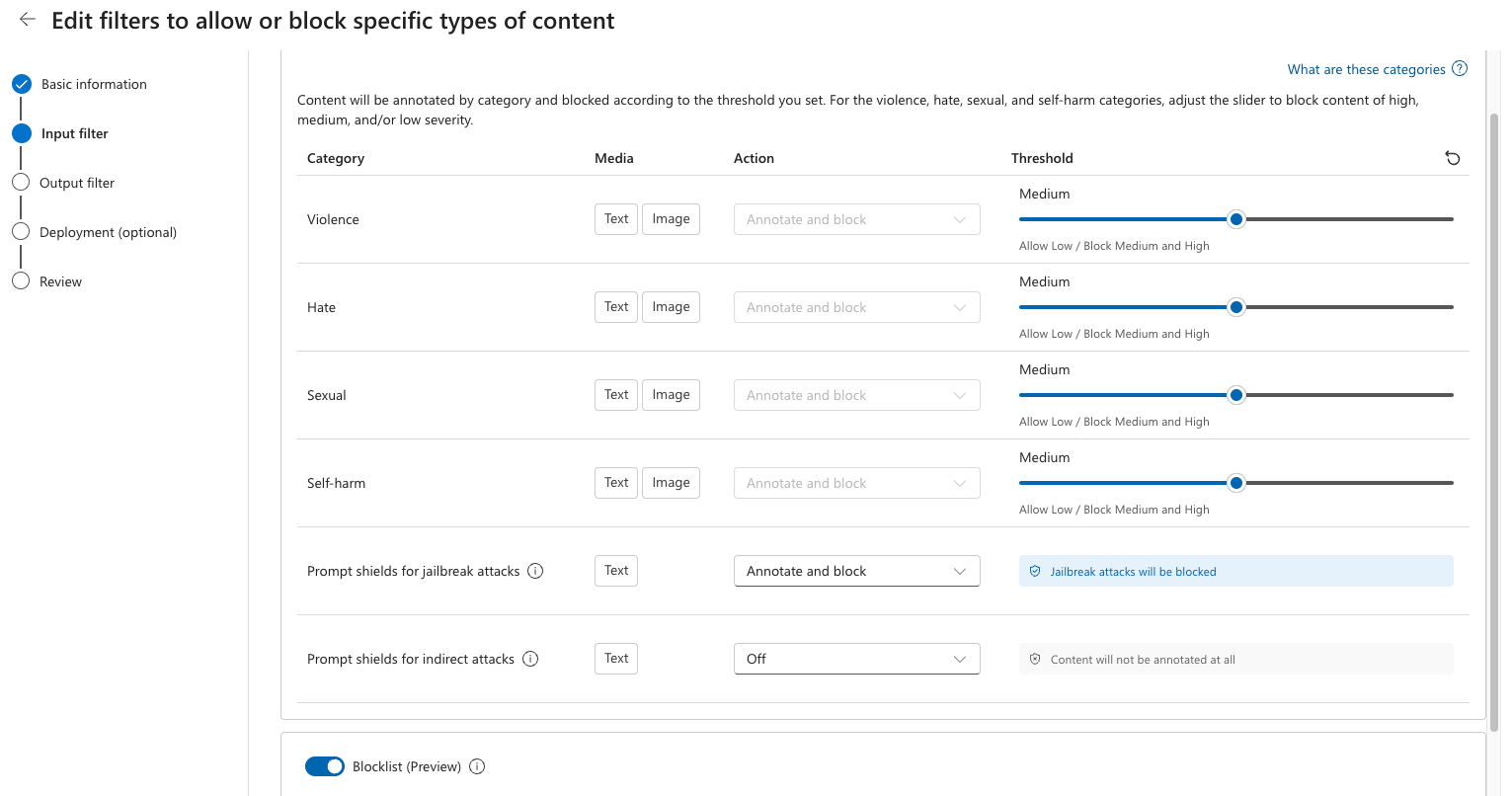
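To make the idea of a fairness check a bit more concrete, here is a minimal Python sketch of one common measure, the demographic parity difference: the gap between the highest and lowest share of positive predictions across groups. This is not how Azure Machine Learning computes its reports; the function names, the groups "A" and "B", and the data are all made up for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest selection rate (0 means equal rates)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model output: 1 = approved, 0 = rejected.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(predictions, groups)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A large gap like this one does not prove that a model is unfair, but it is a signal that the training data and predictions deserve a closer look.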

And my personal favorite is the new content filtering feature (sometimes called Content Safety Filters) in Azure AI Foundry, where you can set up filters for categories like violence, hate, sexual content, and self-harm in AI responses. These content filters take only minutes to set up, but they can make a huge difference for your users.
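Conceptually, a filter like this boils down to a threshold per category. The sketch below is plain Python and not the Azure API: the severity scale, the threshold values and the function names are my own assumptions, and it presumes that some classifier has already scored the text in each category.

```python
# Hypothetical thresholds on an assumed severity scale from 0 (safe) to 6 (severe).
# A response is blocked when a category's score reaches its threshold.
BLOCK_THRESHOLDS = {
    "violence": 4,
    "hate": 2,
    "sexual": 4,
    "self_harm": 2,
}


def blocked_categories(severity_scores, thresholds=BLOCK_THRESHOLDS):
    """Return the categories whose score meets or exceeds the threshold."""
    return sorted(
        category
        for category, score in severity_scores.items()
        if category in thresholds and score >= thresholds[category]
    )


def is_allowed(severity_scores, thresholds=BLOCK_THRESHOLDS):
    """A response passes only if no category is blocked."""
    return not blocked_categories(severity_scores, thresholds)


# Made-up scores for a single AI response.
scores = {"violence": 1, "hate": 3, "sexual": 0, "self_harm": 0}
print(blocked_categories(scores))  # ['hate']
print(is_allowed(scores))          # False
```

Lowering a threshold makes the filter stricter for that category, which is the kind of trade-off you tune when setting up filters for your own users.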

These are just a few of many examples of tools and services we can use to build safe and trustworthy AI solutions.

So, can we really trust AI?

At first glance, AI might seem daunting, especially if we choose not to engage with it. But with a deliberate blend of ethical awareness, tools and careful oversight, we can ensure AI evolves into an asset rather than a threat.

So, can we really trust AI? My answer is yes—if we stay involved, build systems responsibly and ensure they reflect the values we hold dear. Recognizing that issues like bias, fairness, and accountability extend well beyond the code itself is key. By thoughtfully selecting and shaping the data that AI consumes, we can guide its development toward a future that benefits everyone.