Working proactively with Responsible AI using the Threat Modeling framework

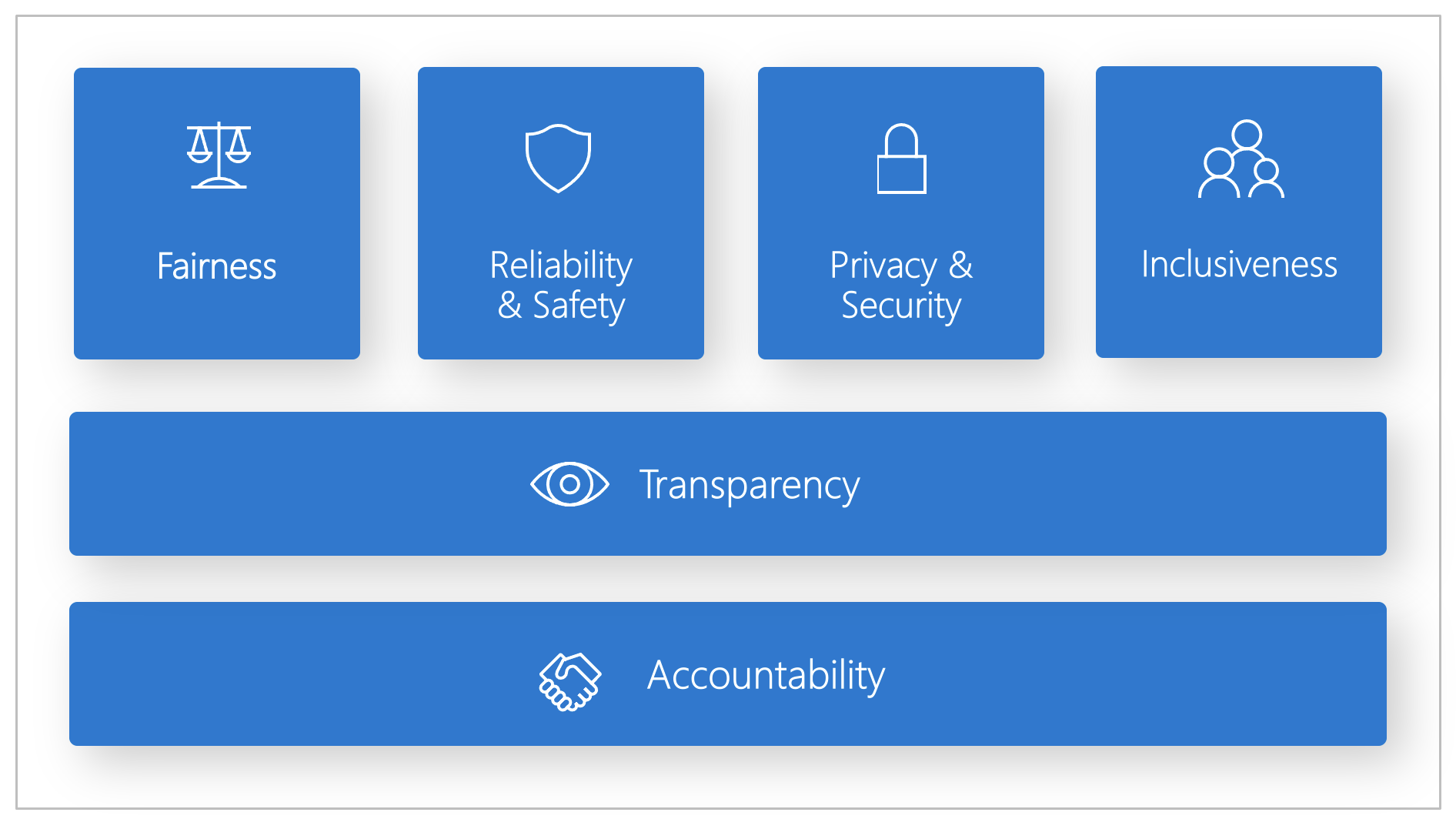

Microsoft Responsible AI is a framework developed by Microsoft to guide the ethical design, development, and deployment of AI systems. Its goal is to ensure that AI technologies are built and used in ways that are fair, transparent, secure, and respectful of privacy and human rights. Read more about the Responsible AI principles here.

At Knowit, we’ve been selected by Microsoft as a close partner to collaborate on Responsible AI through the Microsoft Responsible AI Innovation Center (RAIIC). I’m very proud of that, since this is a topic very close to my heart. 💖

Something that's been on my mind for a while is how we can work more proactively with Responsible AI and address potential issues before they become real problems. How can we prioritize our resources so that we focus on the most significant risks first?

Threat Modeling

Traditionally used in cybersecurity, Threat Modeling involves identifying and analyzing potential threats in a system, allowing developers to mitigate risks before they become real issues. Could this be a valuable tool for ensuring Responsible AI development as well?

Identifying your data flow

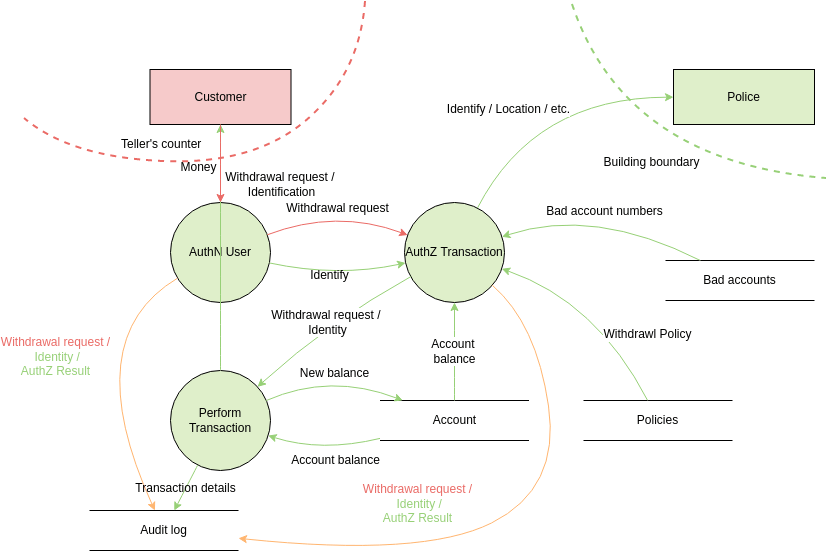

One important step in the Threat Modeling process is to map out the resources and data flow that our systems rely on.

- What data is being used, and how sensitive is the information? In an AI context, sensitive data can range from personal identifiers to behavioral patterns or financial records. A solid understanding of the data sources is crucial in ensuring privacy and avoiding unintended biases.

- Where is that data being sent? AI models often rely on cloud infrastructures and distributed systems, meaning data is processed in various locations. Depending on the nature of that data, this might raise concerns about compliance with local regulations. By identifying all data flows, we can better understand potential vulnerabilities and risks.

- How are you training AI models (Machine Learning) with this data? AI models learn by training on large datasets, but if these datasets are biased or incomplete, the outcomes will reflect those biases. We must verify the training data to ensure fairness and transparency in the resulting AI models.
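
To make the three questions above a bit more concrete, here is a minimal Python sketch of a data flow inventory. It is only an illustration under my own assumptions: the `DataFlow` fields, the sensitivity levels, and the example flows are all hypothetical and not part of any Microsoft tooling.

```python
from dataclasses import dataclass

# Hypothetical sensitivity levels; adapt these to your own classification scheme.
SENSITIVITY = {"public": 0, "internal": 1, "personal": 2, "financial": 3}


@dataclass(frozen=True)
class DataFlow:
    name: str
    source: str
    destination: str
    sensitivity: str         # one of the keys in SENSITIVITY
    leaves_region: bool      # True if the data is processed outside its home region
    used_for_training: bool  # True if the data feeds model training


def flows_needing_review(flows, threshold="personal"):
    """Return flows at or above the sensitivity threshold that either
    leave the home region or are used to train a model."""
    limit = SENSITIVITY[threshold]
    return [
        f for f in flows
        if SENSITIVITY[f.sensitivity] >= limit
        and (f.leaves_region or f.used_for_training)
    ]


# Made-up example flows for an imaginary e-commerce system.
flows = [
    DataFlow("product catalog", "CMS", "recommendation model", "public", False, True),
    DataFlow("purchase history", "order DB", "recommendation model", "personal", False, True),
    DataFlow("payment records", "billing", "cloud analytics", "financial", True, False),
    DataFlow("support tickets", "helpdesk", "internal dashboard", "internal", False, False),
]

for flow in flows_needing_review(flows):
    print(f"{flow.name}: {flow.source} -> {flow.destination}")
```

Even a simple list like this gives the team something to review together: which flows carry sensitive data, where they end up, and which ones shape a model.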

Examples of a Threat Modeling Data Flow Diagram can be found here:

Risk assessment

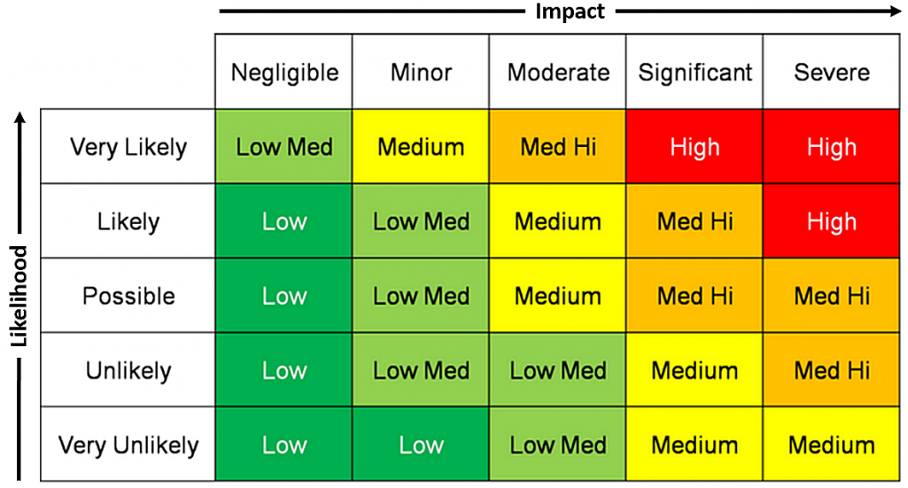

Once we have mapped out the critical resources and data flows, the next step in threat modeling is risk assessment. Each potential threat, whether related to data privacy, bias, or transparency, has a probability of occurring, along with a potential impact on users. By estimating both the likelihood and the severity of these risks, we can prioritize which threats need our immediate attention.

For example, if an AI system uses sensitive data, the risk of a privacy breach might be high, and so is the potential damage to individuals if that breach occurs. On the other hand, a biased recommendation algorithm in an e-commerce platform might have a lower impact but could still harm users by promoting unfair or discriminatory outcomes.
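
One common way to turn this into numbers is to multiply likelihood by impact. The sketch below assumes a 1 to 5 scale for both, and the values I give the two example threats are purely illustrative guesses, not measurements.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Multiply likelihood by impact, both on an assumed 1-5 scale."""
    for value in (likelihood, impact):
        if not 1 <= value <= 5:
            raise ValueError("likelihood and impact must be between 1 and 5")
    return likelihood * impact


# Illustrative values only: pick your own based on your system and users.
privacy_breach = risk_score(likelihood=4, impact=5)
biased_recommendations = risk_score(likelihood=3, impact=2)

print("privacy breach:", privacy_breach)
print("biased recommendations:", biased_recommendations)
```

The exact scale matters less than using the same one consistently, so that threats can be compared with each other.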

Prioritizing threats

The final step is to rank these threats based on their overall risk. It’s very common to use a Risk Assessment matrix such as this one to prioritize potential threats:

This allows us to focus on addressing the most significant dangers first and plan the work in our backlog. By using the Threat Modeling framework, we can address Microsoft’s Responsible AI principles proactively and responsibly, instead of doing nothing and just hoping that problems will never occur.
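
As a rough sketch of how such a ranking could feed a backlog, the Python snippet below looks up each threat in a small risk matrix and sorts the result. The 3x3 matrix, the rating labels, and the example threats are my own assumptions for illustration; your organization's matrix may well look different.

```python
from typing import NamedTuple


class Threat(NamedTuple):
    name: str
    likelihood: int  # 1 (rare) to 3 (likely), an assumed scale
    impact: int      # 1 (minor) to 3 (severe), an assumed scale


# A hypothetical 3x3 risk matrix keyed by (likelihood, impact).
MATRIX = {
    (1, 1): "low",    (1, 2): "low",    (1, 3): "medium",
    (2, 1): "low",    (2, 2): "medium", (2, 3): "high",
    (3, 1): "medium", (3, 2): "high",   (3, 3): "high",
}
ORDER = {"high": 0, "medium": 1, "low": 2}


def prioritize(threats):
    """Sort threats so the highest matrix rating comes first;
    ties are broken by impact, then by likelihood."""
    return sorted(
        threats,
        key=lambda t: (ORDER[MATRIX[(t.likelihood, t.impact)]], -t.impact, -t.likelihood),
    )


# Made-up threats with made-up ratings.
threats = [
    Threat("opaque model decisions", 2, 2),
    Threat("privacy breach of sensitive data", 3, 3),
    Threat("biased recommendations", 3, 1),
    Threat("data processed outside allowed region", 1, 3),
]

backlog = prioritize(threats)
for t in backlog:
    print(MATRIX[(t.likelihood, t.impact)], "-", t.name)
```

Breaking ties on impact first is a design choice: when two threats share a rating, I would rather start with the one that hurts users most if it happens.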

I think using the Threat Modeling framework to work proactively with Responsible AI is an area with a lot of potential. I hope you enjoyed these ramblings, and that they inspire you to work more responsibly with AI. Thank you for reading this far.

Cheers! ❤️